Multi-label image classification Tutorial with Keras ImageDataGenerator

Tutorial on using Keras for Multi-label image classification using flow_from_dataframe both with and without Multi-output model.

PREREQUISITE:

To use the flow_from_dataframe function, you would need pandas installed.

You could do that by pip install pandas

Note: Make sure you’re using the latest keras-preprocessing library by installing it directly from the Github repo. It contains many changes from the one that resides under keras.preprocessing.

Make sure you uninstall the older keras-preprocessing that is installed when you’re installing keras by executing the command

pip uninstall keras-preprocessingand install the keras preprocessing library by executing the command

pip install git+https://github.com/keras-team/keras-preprocessing.gitAnd finally, restart the kernel if needed.

Let's get started!

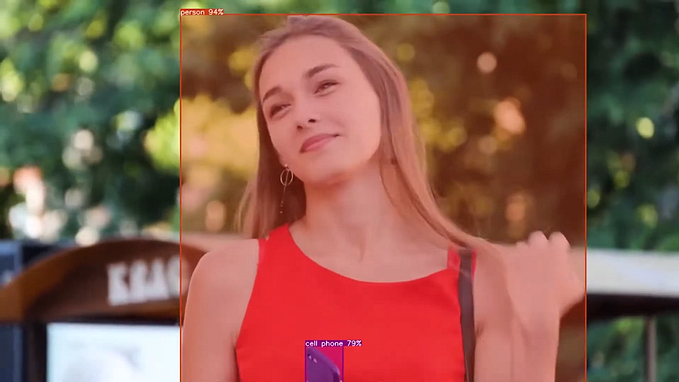

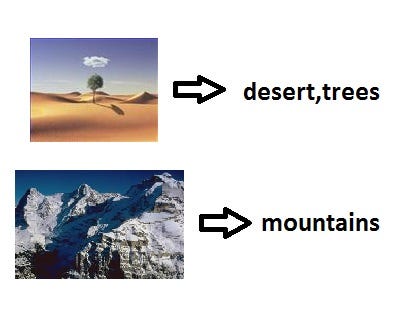

I will be using the dataset that I found here, which contains 2000 images belonging to various combinations of 5 labels (‘desert’, ‘mountains’, ‘sea’, ‘sunset’, ‘trees’). I have preprocessed the dataset to suit this example. You can download it from here.

Import all the stuff needed and read the CSV file with pandas.

from keras.models import Sequential"""Import from keras_preprocessing not from keras.preprocessing, because Keras may or maynot contain the features discussed here depending upon when you read this article, until the keras_preprocessed library is updated in Keras use the github version."""from keras_preprocessing.image import ImageDataGenerator

from keras.layers import Dense, Activation, Flatten, Dropout, BatchNormalization

from keras.layers import Conv2D, MaxPooling2D

from keras import regularizers, optimizers

import pandas as pd

import numpy as np

There are two formats that you can use the flow_from_dataframe function from ImageDataGenerator to handle the Multi-Label output problem.

Format 1:

The DataFrame has the following format:

No processing is needed further the flow_from_dataframe can handle this case out-of-the-box.

df=pd.read_csv(“./miml_dataset/miml_labels_1.csv”)columns=["desert", "mountains", "sea", "sunset", "trees"]datagen=ImageDataGenerator(rescale=1./255.)

test_datagen=ImageDataGenerator(rescale=1./255.)train_generator=datagen.flow_from_dataframe(

dataframe=df[:1800],

directory="./miml_dataset/images",

x_col="Filenames",

y_col=columns,

batch_size=32,

seed=42,

shuffle=True,

class_mode="raw",

target_size=(100,100))valid_generator=test_datagen.flow_from_dataframe(

dataframe=df[1800:1900],

directory="./miml_dataset/images",

x_col="Filenames",

y_col=columns,

batch_size=32,

seed=42,

shuffle=True,

class_mode="raw",

target_size=(100,100))test_generator=test_datagen.flow_from_dataframe(

dataframe=df[1900:],

directory="./miml_dataset/images",

x_col="Filenames",

batch_size=1,

seed=42,

shuffle=False,

class_mode=None,

target_size=(100,100))

Format 2:

The DataFrame has the following format:

If the dataset is formatted this way, In order to tell the flow_from_dataframe function that “desert,mountains” is not a single class name but 2 class names separated by a comma, you need to convert each entry in the “labels” column to a list(not necessary to convert single labels to a list of length 1 along with entries that contain more than 1 label, but it’s good to maintain everything as a list anyway).

df = pd.read_csv("./miml_dataset/miml_labels_2.csv")

df["labels"]=df["labels"].apply(lambda x:x.split(","))

datagen=ImageDataGenerator(rescale=1./255.)

test_datagen=ImageDataGenerator(rescale=1./255.)train_generator=datagen.flow_from_dataframe(

dataframe=df[:1800],

directory="./miml_dataset/images",

x_col="Filenames",

y_col="labels",

batch_size=32,

seed=42,

shuffle=True,

class_mode="categorical",

classes=["desert", "mountains", "sea", "sunset", "trees"],

target_size=(100,100))valid_generator=test_datagen.flow_from_dataframe(

dataframe=df[1800:1900],

directory="./miml_dataset/images",

x_col="Filenames",

y_col="labels",

batch_size=32,

seed=42,

shuffle=True,

class_mode="categorical",

classes=["desert", "mountains", "sea", "sunset", "trees"],

target_size=(100,100))test_generator=test_datagen.flow_from_dataframe(

dataframe=df[1900:],

directory="./miml_dataset/images",

x_col="Filenames",

batch_size=1,

seed=42,

shuffle=False,

class_mode=None,

target_size=(100,100))

You would have noticed I have manually set classes to a list containing all the class names(can be a list of any strings that represent the class names), I did this because the dataset is small in size and some classes may have been lost while splitting the dataframe. Usually, this is not necessary unless your dataset is very small, this problem doesn’t arise if the dataset follows Format 1.

Caution: Datasets that follow Format 1 may require more memory to read and hold the dataframe in memory depending upon the no. of classes and rows in the dataframe.

Build the model:

model = Sequential()

model.add(Conv2D(32, (3, 3), padding='same',

input_shape=(100,100,3)))

model.add(Activation('relu'))

model.add(Conv2D(32, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))model.add(Conv2D(64, (3, 3), padding='same'))

model.add(Activation('relu'))

model.add(Conv2D(64, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))model.add(Flatten())

model.add(Dense(512))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(5, activation='sigmoid'))

model.compile(optimizers.rmsprop(lr=0.0001, decay=1e-6),loss="binary_crossentropy",metrics=["accuracy"])

Some might want to use separate loss functions for each output instead of since Dense layer with 5 units, Scroll down to see how to use Multi-Output Model.

Why “binary_crossentropy” as the loss function and “sigmoid” as the final layer activation?

Refer to this thread it includes many articles and discussions related to this.

Fitting the Model:

STEP_SIZE_TRAIN=train_generator.n//train_generator.batch_size

STEP_SIZE_VALID=valid_generator.n//valid_generator.batch_size

STEP_SIZE_TEST=test_generator.n//test_generator.batch_size

model.fit_generator(generator=train_generator,

steps_per_epoch=STEP_SIZE_TRAIN,

validation_data=valid_generator,

validation_steps=STEP_SIZE_VALID,

epochs=10

)Predict the output

test_generator.reset()

pred=model.predict_generator(test_generator,

steps=STEP_SIZE_TEST,

verbose=1)- You need to reset the test_generator before every time you call the predict_generator. This is important, if you forget to reset the test_generator you will get outputs in a weird order.

Generating output files always depends upon your need. Anyway, I have included the code for a generic situation. The below code is just for reference, might not be optimal or what you want.

pred_bool = (pred >0.5)Output in format 1:

predictions = pred_bool.astype(int)

columns=["desert", "mountains", "sea", "sunset", "trees"]

#columns should be the same order of y_colresults=pd.DataFrame(predictions, columns=columns)

results["Filenames"]=test_generator.filenames

ordered_cols=["Filenames"]+columns

results=results[ordered_cols]#To get the same column order

results.to_csv("results.csv",index=False)

Output in format 2:

predictions=[]

labels = train_generator.class_indices

labels = dict((v,k) for k,v in labels.items())

for row in pred_bool:

l=[]

for index,cls in enumerate(row):

if cls:

l.append(label[index])

predictions.append(",".join(l))

filenames=test_generator.filenames

results=pd.DataFrame({"Filename":filenames,

"Predictions":predictions})

results.to_csv("results.csv",index=False)Multi-label classification with a Multi-Output Model

Here I will show you how to use multiple outputs instead of a single Dense layer with n_class no. of units.

Everything from reading the dataframe to writing the generator functions is the same as the normal case which I have discussed above in the article. The only change we need to make here is to use Keras’s Function API instead of the Sequential API because it doesn’t support multiple outputs and an extra wrapper function to return the target label array in the expected format.

inp = Input(shape = (100,100,3))

x = Conv2D(32, (3, 3), padding = 'same')(inp)

x = Activation('relu')(x)

x = Conv2D(32, (3, 3))(x)

x = Activation('relu')(x)

x = MaxPooling2D(pool_size = (2, 2))(x)

x = Dropout(0.25)(x)

x = Conv2D(64, (3, 3), padding = 'same')(x)

x = Activation('relu')(x)

x = Conv2D(64, (3, 3))(x)

x = Activation('relu')(x)

x = MaxPooling2D(pool_size = (2, 2))(x)

x = Dropout(0.25)(x)

x = Flatten()(x)

x = Dense(512)(x)

x = Activation('relu')(x)

x = Dropout(0.5)(x)

output1 = Dense(1, activation = 'sigmoid')(x)

output2 = Dense(1, activation = 'sigmoid')(x)

output3 = Dense(1, activation = 'sigmoid')(x)

output4 = Dense(1, activation = 'sigmoid')(x)

output5 = Dense(1, activation = 'sigmoid')(x)

model = Model(inp,[output1,output2,output3,output4,output5])

model.compile(optimizers.rmsprop(lr = 0.0001, decay = 1e-6),

loss = ["binary_crossentropy","binary_crossentropy", "binary_crossentropy","binary_crossentropy", "binary_crossentropy"],metrics = ["accuracy"])Now that this model has 5 outputs instead of 1, Each output needs its own loss function. You can pass a list of loss functions that will be applied to corresponding outputs in the order they are given to the model. If you name the output layers, you can even pass a dictionary instead of a list. In this case, the keys will be the names of the output layers and the values will be the loss functions.

We now have the model that has 5 output layers but our train_generator and valid_generator output a single array for the target labels, to handle this we need to write a Python generator function that takes train_generator or valid_generator as input and yields a tuple containing the images and a list containing 5(No. of outputs) arrays for the target labels.

def generator_wrapper(generator):

for batch_x,batch_y in generator:

yield (batch_x,[batch_y[:,i] for i in range(5)])Now you can train the model!

STEP_SIZE_TRAIN=train_generator.n//train_generator.batch_size

STEP_SIZE_VALID=valid_generator.n//valid_generator.batch_size

STEP_SIZE_TEST=test_generator.n//test_generator.batch_size

model.fit_generator(generator=generator_wrapper(train_generator),

steps_per_epoch=STEP_SIZE_TRAIN)

validation_data=generator_wrapper(valid_generator),

validation_steps=STEP_SIZE_VALID,

epochs=1,verbose=2

)The prediction is the same as before.

test_generator.reset()

pred=model.predict_generator(test_generator,

steps=STEP_SIZE_TEST,

verbose=1)